SQLServerAsyncExecProcedures

Introduction

On many occasions, the processes or statements programmed in T-SQL do not require a sequential execution, that is, a statement does not need the results of the previous statement. This is the case of the creation of indexes, or the transfer of information from one database to another.

Insert Bulk Bestia offers, under certain types of licenses, a library with a set of stored procedures that allow defining the jobs to be carried out in parallel and how to carry out such jobs.

That said, it should be noted that the execution of processes in parallel within a SQL Server Domain is not easy. Adding to this the possibility of using transactions turns out to be an arduous and complex task, since SQL Server transactions do not allow the execution of processes in parallel. For this, an artifice has been assembled that can be useful in most cases, but which does not cover all the possibilities of error.

However, as will be seen in the next chapter, with determination, patience, and a lot of trial and error, it is possible to define processes that recover from failures. However, nowadays, with the policies of substituting smart and expensive personnel for cheap personnel, more powerful machines and more dumb than intelligent tools, it is very difficult to come up with this type of solution. So for those of you with a healthy curiosity and limitless learning ability, this chapter and the next are offered. Just apologize to the readers for my poor explanations, and encourage them to help with their comments to improve them and even improve the process. Do not hesitate to use the page Contact.

Database Help

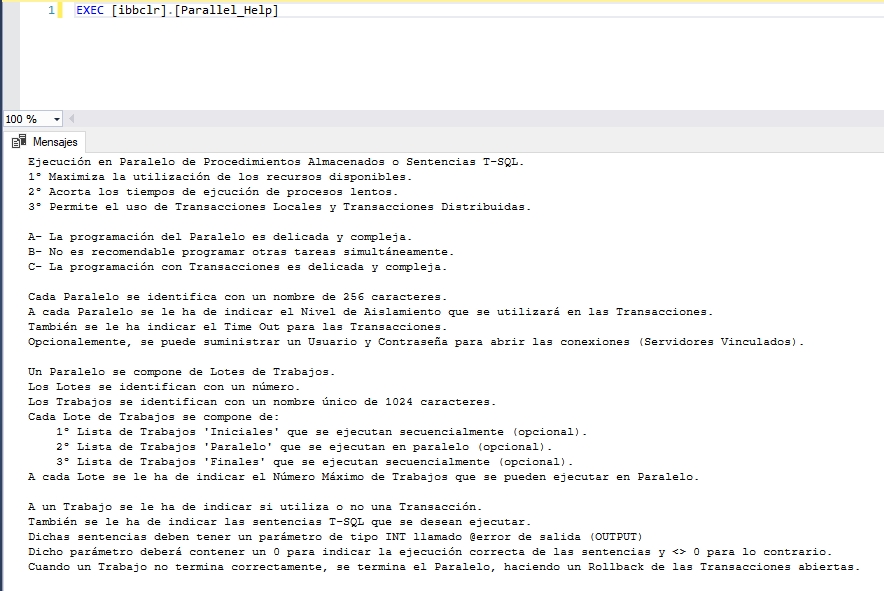

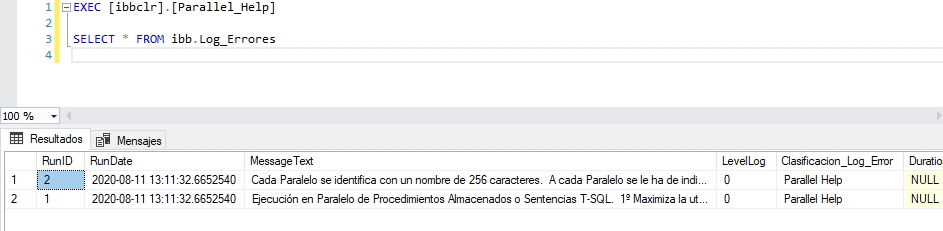

First, indicate that all the stored procedures belonging to this library have their corresponding replica terminated with the prefix "_Help" that show a brief help in the "Messages" panel in the SSMS Results window and in the table 'ibb. Log_Errors'.

ibbclr.Parallel_Help

'SQLServerAsyncExecProcedures' Strategy

The following figure outlines the organization that 'SQLServerAsyncExecProcedures' makes of the jobs to be executed.

Parallel Structure - 1

Parallel

A "Parallel" is an independent set of "Jobs" to run. 'SQLServerAsyncExecProcedures' can store a list of 'Parallels' and the user will decide when each 'Parallel' is executed.

A 'Parallel' is identified by a unique 256 character name. This name will be the one that appears in the 'Classificacion_Log_Error' field of the table 'ibb.Log_Errores' when insert a record that affects the 'Parallel'. During execution, it is in this table where the traces and errors that could be produced in the library will be inserted. This does not mean that if errors occur in the statements that are executed, they appear in that table. This will depend on whether in these sentences an error control is made and these are dumped to said table.

Adding that it is absurd to try to run two or more 'Parallels' in parallel, since a 'Parallel' is supposed to be squeezing the most of the SQL Server instance's resources.

When the execution of a 'Parallel' has finished, it will disappear from the list of parallels. If the 'Parallel' has been executed correctly, it will return a 0, otherwise a value other than 0.

Finally, it should be noted that, if an SQLCLR problem appears, such as execution freezes or enters an infinite loop, it is possible to download and reload the AppDomain by executing the following instructions:

ALTER ASSEMBLY[SQLServerAsyncExecProcedures] WITH PERMISSION_SET = EXTERNAL_ACCESS

ALTER ASSEMBLY[SQLServerAsyncExecProcedures] WITH PERMISSION_SET = UNSAFE

Execution Options for a Parallel

These options are set with the stored procedure 'ibbclr.Parallel_SetOptions'. The Transactions will be discussed later, although the parameters associated with the Transactions for a 'Parallel' are described here.

The parameters that the stored procedure supports are:

- parallelName: NVARCHAR(256). Mandatory. Identifier of the 'Parallel'. String of 256 characters maximum.

-

transactionLevel: NVARCHAR(20). Mandatory. Transaction Isolation Level identifier.

String of 20 characters maximum. Possible values are:

- 'Serializable' Volatile data can be read but not modified, and no new data can be added during the transaction.

- 'RepeatableRead' Volatile data can be read but not modified during the transaction. New data can be added during the transaction.

- 'ReadCommitted' Volatile data cannot be read during the transaction, but can be modified.

- 'ReadUncommitted' (Default) Volatile data can be read and modified during the transaction.

-

'Snapshot' Volatile data can be read. Before a transaction modifies data, it verifies if another

transaction has changed the data after it was initially read.

If the data has been updated, an error is raised. This allows a transaction to get to the previously committed value of the data.

Transactions with IsolationLevel Snapshot cannot be promoted. - 'Chaos' The pending changes from more highly isolated transactions cannot be overwritten.

- 'Unspecified' A different isolation level than the one specified is being used, but the level cannot be determined. An exception is thrown if this value is set.

- maxTimeOutTransaction: INT. Mandatory. Time in minutes. When you run a Job on a Transaction, the Transaction opens and remains open until all Jobs are completed. You must define a value such that it is higher than the estimated time the process will take from the opening of the first Transaction until the end of the Parallel.

- user: NVARCHAR(512). User. Optional. User with which the connections to the Database will be opened. May be required to use Linked Servers.

- pwd: NVARCHAR(512). Password. Optional. Password for the User.

- srv: NVARCHAR(512). Server. Optional. Server or IP address in case there are problems with the connection string.

- bd: NVARCHAR(512). Database. Optional. Database in case there are problems with the connection string.

- connectTimeout: INT. Optional. Timeout in seconds before an error occurs when establishing the connection. By default it is set to 60 seconds.

The usefulness of these last parameters will be seen in the next chapter, where an example of information transfer between linked servers is detailed.

Batch of Jobs

A job batch is simply a grouping of jobs that runs with the same degree of parallelism. Batches are identified with a number and when 'Parallel' is executed the batches are executed sequentially in ascending order.

With the stored procedure 'ibbclr.Parallel_SetOption_MaxTaskBatch' you define the degree of parallelism of a certain batch. The parameters are:

- parallelName: NVARCHAR(256). Mandatory. Identifier of the 'Parallel'. String of 256 characters maximum.

- batch: SMALLINT. Mandatory. Work Lot Identifier.

- maxCurrentTask: TINYINT. Mandatory. Value between 1 and 64. Maximum number of Parallel Jobs to be executed for the Lot.

A 'maxCurrentTask' similar to the number of processors assigned to SQL Server is recommended.

It is recommended to specify OPTION (MAXDOP 1) in the Querys of each Job that are executed in Parallel. As they are processes that are going to run in parallel, each processor is going to be very busy with a certain process. In this way, each processor is assigned its Work, avoiding CPU blocks.

OPTION (MAXDOP 1) is practically essential in MERGE statements, since the parallelization of this type of Querys will block the CPU.

Groups of Jobs

Each Lot groups the Jobs to be carried out in three groups:

- Iniciales: Optional. Group of Jobs to be executed at the beginning of the Batch. The sequence followed is the order in which they were defined.

- Paralelo: Optional. Group of Jobs to be executed in parallel. The startup sequence of the jobs follows the order in which they were defined, so it is recommended to put the jobs with the longest duration in first place, since this way, in parallel, the jobs with less duration will be executed. There will be as many jobs running as indicated in 'maxCurrentTask' for the Batch they belong to.

- Finales: Optional. Group of Jobs to be executed at the beginning of the Batch. The sequence followed is the order in which they were defined.

Job

A job is the set of statements that you want to execute. It is defined by the stored procedure 'ibbclr.Parallel_AddJob', whose input parameters are:

- parallelName: NVARCHAR(256). Mandatory. Identifier of the 'Parallel'. String of 256 characters maximum.

- batch: SMALLINT. Mandatory. Batch Jobs Identifier.

- grupo: NVARCHAR(15). Mandatory. Group within the Lot to which you want to register the work. Allowed values are: 'Iniciales', 'Paralelo' o 'Finales'.

- jobName: NVARCHAR(1024). Mandatory. String of 1024 characters maximum. Job Identifier. It must be a unique name within the Parallel. This name will be the one that appears in the field 'Classification_Log_Error' of the table 'ibb.Log_Errores' when inserting a record that affects the 'Job', be it a trace or an error message.

- withTransaction: BIT. Mandatory. 1 The job has its own Transaction. 0 The Job has no Transaction.

-

jobTSQL: NVARCHAR(MAX). Mandatory. T-SQL script to be executed.

The result of the execution must be returned in a parameter called @error of type INT and output.

- = 0 It should indicate that the Job was successful. At the end of the Parallel execution, if all the Jobs have been executed correctly, a Commit of the open Transactions will be made.

- != 0 It should indicate that Errors have occurred in the Job. It will cancel the Jobs that are in execution, suspend the execution of the rest of the jobs and a Rollback of the open Transactions will be made.

Each Job runs on a new connection to the Database. It is the way in which Insert Bulk Bestia parallels the 'Jobs'. The following options are established for all connections:

SET ANSI_DEFAULTS OFF;

SET ANSI_WARNINGS, ANSI_PADDING, ANSI_NULLS,

ARITHABORT, QUOTED_IDENTIFIER, CONCAT_NULL_YIELDS_NULL ON;

SET NUMERIC_ROUNDABORT, CURSOR_CLOSE_ON_COMMIT,

IMPLICIT_TRANSACTIONS OFF;

SET REMOTE_PROC_TRANSACTIONS, XACT_ABORT ON;

The last two options, SET REMOTE_PROC_TRANSACTIONS, XACT_ABORT ON, are practically essential when using Linked Servers, since, on the one hand, local transactions to SQL Server will become distributed transactions, and on the other, it will be necessary that if a error in the T-SQL code will reverse the transaction.

Although these options are added by default when opening the connection, they are highly dependent on the work to be done, the desired behavior, the Transactions (local and / or distributed) and the errors. The SET REMOTE_PROC_TRANSACTIONS ON option will depend on how you want Distributed Transactions with MS DTC to behave. Thus, it is recommended to carry out different tests (with and without caused errors) until the appropriate configuration is found.

Parallel Remove

'ibbclr.Parallel_Remove' it simply removes from the list of 'Parallels' the 'Parallel' indicated in its input parameter.

Transactions

As previously mentioned, an artifice has been mounted to handle Transactions, since SQL Server does not allow the execution of processes in parallel within a transaction. This artifice can be useful on certain occasions, but what is really useful is the definition of an algorithm that allows the database to recover from failures. In the next chapter shows an example that uses this technique.

If, in addition to the restrictions imposed by SQL Server for Transactions, it is added that these can "promote" from local transactions to distributed transactions without the programmer being able to control it, the complexity of their treatment, programming and tests grows exponentially.

This section tries to explain in a simple way the treatment that Insert Bulk Bestia does with transactions.

Opening the Connections to the Database

As noted above, each job runs on a separate connection. Each new connection has certain characteristics, of which the main ones are listed below:

- Database and Server: Those indicated in the 'Parallel' options or, if nothing is indicated, the Database and Server where the 'Parallel' is executed.

- Username and Password: Those indicated in the 'Parallel' options or, if nothing is indicated, the option 'IntegratedSecurity' = true.

- Pooling: False. Connection pooling is not used due to a Microsoft bug: When a connection is released and goes to the Pool of connections, it stays with the IsolationLevel established, in such a way that, if you want to change the isolation level for a new connection, taking the connection from the Pool does not change the isolation level Instead, he keeps the one he had.

- Enlist: False. If a job has been defined to be executed within a Transaction, firstly, the transaction is created using the "System.Transactions" library with the desired isolation level and Timeout characteristics. Subsequently, the connection to the database is created and opened, and finally said connection is registered in the previously created transaction.

- MultipleActiveResultSets: True

- ConnectRetryCount: 10

- MultiSubnetFailover: True

- ConnectTimeout: Value of the 'connectTimeout' parameter of the 'Parallel' options

Given that "System.Data.SqlClient" only allows working with transactions local to the instance where the 'Parallel' is executed, we have chosen to use the "System.Transactions" library to be able to work with Distributed Transactions.

With "System.Transactions" you create a scope ('TransactionScope') that allows asynchronous execution ('TransactionScopeAsyncFlowOption.Enabled'). This scope is associated with a Transaction, which is the one used to execute the job. In this way, the execution of the jobs in parallel is achieved.

Treatment of Transactions

When the 'Parallel' is executed, all batches are executed in ascending order according to their identifier. In each batch, the 'Initial', 'Parallel' and 'Final' jobs are executed in that order. The Transactions of those jobs marked to be executed within a transaction, are stored in a list waiting for a commit or rollback.

If all Jobs ran successfully, all open Transactions will be committed. Whereas, if a job fails, all the jobs that are running will be canceled, no more jobs will be executed, and the transactions that are open will be rolled back.

For this reason, it is vitally important that the 'maxTimeOutTransaction' parameter covers the entire duration of the 'Parallel', since, otherwise, the transaction manager will begin to cancel them as the Transactions exceed this value.

One of the problems that Distributed Transactions often have is that they "fail more than a fairground shotgun." Thus, a correct design of the desired algorithm is essential so that the time that the transactions remain open is the minimum possible, at the same time that a minimum use of them is also recommended due to their complexity and unreliability.

Finally, it should be noted that, depending on the Isolation Level, the Jobs will be able to view/modify the 'Volatile data' of the other Jobs.

RECOMMENDATIONS: Think, code and test. Rethink, recode, and retest. And so on until the desired results are obtained. Use the 'ibb.Log_Errores' table and the 'ibb.Log_Errores_Inserta' stored procedure within the statements to be executed, as they will make debugging the process extremely easy.